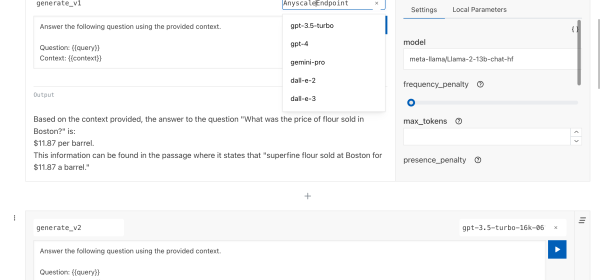

Sentiment analysis, a core task in NLP, studies the opinions and emotions expressed in text. Large Language Models (LLMs) such as GPT and BERT have improved sentiment analysis by capturing context and subtleties, which supports applications in business, public opinion analysis, and mental health. Challenges include bias and privacy concerns, and future work aims to improve accuracy and fairness. A practical example is included.