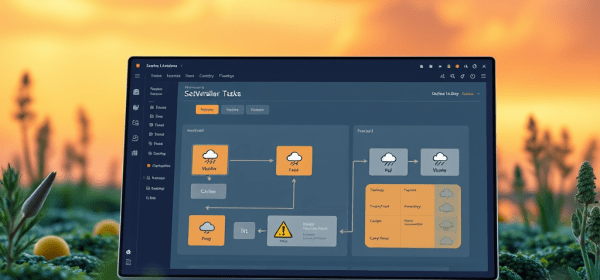

A new breed of organizations is harnessing AI throughout their operations to improve efficiency and drive innovation. Microsoft Marketplace serves as a key resource for them: it offers a wide range of AI tools and models that businesses can adopt, build on, or customize to fit their needs while optimizing cost and performance.