If you’ve stayed with me through to this, the final post in the series, thank you, and I hope it’s been a worthwhile read. In this post, we describe the dimensions of Autonetics and why they’ve been included as part of the overall concept.

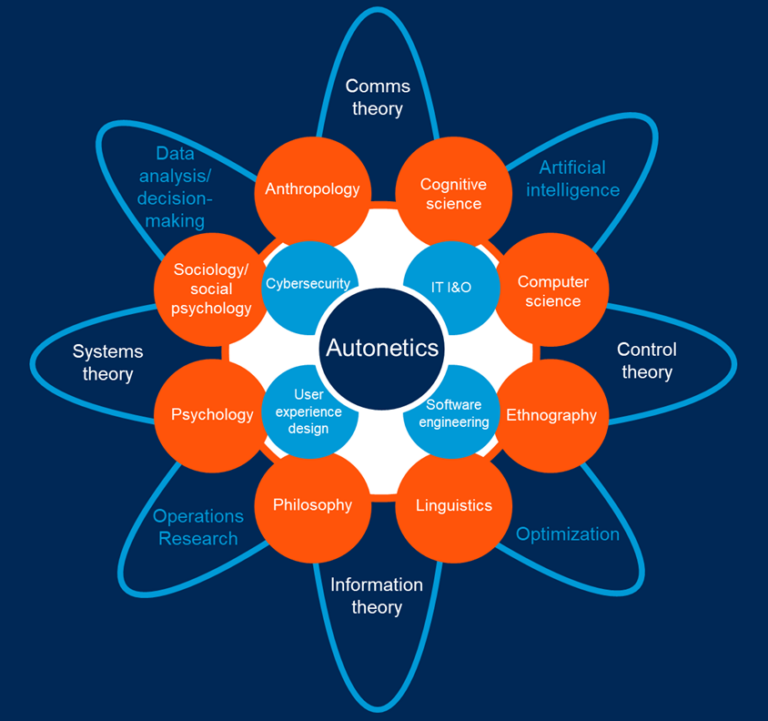

Figure 1. The scope of Autonetics.

The outer layer of the diagram represents the contributions to Autonetics from the field of cybernetics, which provides the theoretical foundation for the overall model; many AI concepts sprang directly from the early work of the cybernetics pioneers. Some topics (those in blue text) seem obvious, so I’ll forgo any discussion of those, but I want to describe specifically how several critical theories contribute to the design of intelligent automation:

- Communication theory: AI systems often process and interpret large amounts of data, and communication theory can help ensure that this data is transmitted and processed accurately, efficiently and, by extension, securely.

- Control theory: this is important to the development of AI because it provides a framework for designing intelligent systems that can control and regulate their own behavior. In particular, control theory emphasizes the role of feedback loops in regulating a system’s behavior. The same concept can be used to design feedback mechanisms that enable an AI system to monitor its own performance and adjust its behavior accordingly.

- Information theory: this deals with quantifying and measuring information, specifically in the context of transmission and storage. It provides techniques for data compression and error correction that help ensure information is processed efficiently and correctly, and its concepts extend to securing data against malicious attacks.

- Systems theory: systems theory and transfer learning (a machine learning technique) are related in that both deal with the transfer of knowledge or information from one system to another. In transfer learning, the knowledge gained from a source task is used to improve performance on a target task. Similarly, systems theory studies how the components of a system interact with each other and how information flows between them.
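For readers who want something more concrete, here is a minimal Python sketch of two of the ideas above, built on simplifying assumptions of my own. The first is a proportional (P-only) feedback loop of the kind control theory describes: on each pass, the system measures the gap between a target and its current behavior and corrects a fixed fraction of that gap. The second covers two information-theory basics: Shannon entropy, which quantifies information in bits, and a simple three-fold repetition code for error correction. The function names, gain and setpoint are illustrative only and are not part of the Autonetics model.

```python
import math
from collections import Counter


def feedback_loop(setpoint, initial_output, gain, steps):
    """Proportional (P-only) negative feedback.

    Each step measures the error between the setpoint and the current
    output (monitoring performance) and corrects a fraction of it
    (adjusting behavior). Returns the output after every step.
    """
    output = initial_output
    history = [output]
    for _ in range(steps):
        error = setpoint - output   # monitor: how far are we from the goal?
        output += gain * error      # adjust: correct in proportion to the error
        history.append(output)
    return history


def shannon_entropy(symbols):
    """Shannon entropy in bits per symbol: H = -sum(p * log2(p))."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def repetition_encode(bits, n=3):
    """Error-correcting code: repeat every bit n times."""
    return [b for b in bits for _ in range(n)]


def repetition_decode(coded, n=3):
    """Majority vote over each block of n bits; corrects up to n // 2 flips per block."""
    return [
        1 if sum(coded[i:i + n]) > n // 2 else 0
        for i in range(0, len(coded), n)
    ]


if __name__ == "__main__":
    print(feedback_loop(setpoint=10.0, initial_output=0.0, gain=0.5, steps=5))
    print(shannon_entropy("aabb"), shannon_entropy("abcd"))
    sent = repetition_encode([1, 0, 1])
    sent[1] ^= 1  # simulate a single bit flipped by a noisy channel
    print(repetition_decode(sent))
```

With a gain of 0.5, each pass halves the remaining error, so the output approaches the setpoint without overshooting; a gain between 1 and 2 would overshoot and oscillate before settling, and a gain above 2 would diverge, which is one reason the design of the feedback mechanism matters. The entropy function shows why a skewed source ("aaaa", 0 bits) is more compressible than a uniform one ("abcd", 2 bits), and the repetition code recovers the original bits despite the simulated corruption.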

As we progress towards the center of the diagram, we next encounter the disciplines that support a more human-centric approach to AI system design. Below are what I believe are the most important areas to incorporate into future intelligent automation:

- Anthropology: the study of human societies and cultures, which is increasingly important for developing AI systems that interact with humans in culturally appropriate ways. Anthropological concepts can help AI developers understand cultural differences and can provide insight into how AI systems can be designed to respect and accommodate different cultural norms, practices and nuances.

- Cognitive science: an interdisciplinary field that combines psychology, neuroscience, linguistics and philosophy to study how humans perceive, think and reason. It is relevant to the design of AI systems that need to understand human cognition and behavior; for example, cognitive science might help in developing an AI-powered system that adapts its teaching approach to a user’s learning style. Within cognitive science, neuroscience studies the structure and function of the nervous system, including the brain, and how it relates to behavior and cognition. It is useful for developing AI systems that mimic human cognitive processes such as perception and attention.

- Computer science: probably fairly obvious, but in general it is the core discipline for developing the technical infrastructure of AI systems, such as the algorithms (e.g., a deep learning model for image analysis), data structures and programming languages that enable computers to process and analyze data.

- Ethnography: a qualitative research methodology that involves observing and studying people in their natural environments to gain insight into the tasks they perform, the challenges they face and the tools they use to do their jobs. With those insights, designers can build AI systems that are more likely to be accepted and adopted by users.

- Linguistics: the study of language, which is important for developing AI systems that can understand human language and respond appropriately. Linguistic knowledge can help AI developers understand the complexities of human speech, including grammar, syntax and meaning, and can provide insight into how AI systems can be designed to interpret language accurately. For example, it can help in designing natural language processing algorithms that correctly interpret colloquialisms and other informal usage.

- Philosophy: the study of fundamental questions about existence, knowledge, values and reality. It is relevant to the design of AI systems with respect to ethical and moral questions, including issues of privacy, consent and data security.

- Psychology: the study of behavior and mental processes, which can be useful for developing AI systems that understand and predict human behavior. Psychological insight might help with the design of, for example, a chatbot that provides personalized support and resources to users based on their reported symptoms and needs.

- Sociology and social psychology: sociology is the study of social relationships and societal institutions, and is important for understanding how AI systems can affect social dynamics and structures, such as social inequality. Social psychology, on the other hand, is the study of how people interact with each other in social contexts, and is important for developing AI systems that interact with humans in socially appropriate ways. This discipline can help AI developers understand how people form attitudes and perceptions, how they make decisions and how they respond to social cues and norms.

Finally, we come to the innermost circles, where we have positioned four of the traditional IT disciplines. I don’t think these need explanation beyond noting that they represent the technologies and processes upon which intelligent automation systems will be constructed within the enterprise. Yet, hopefully by now, you will recognize (and agree) that these functional areas are “necessary but not sufficient”: while they can help to build and operate an intelligent automation system, they don’t guarantee that the automation will be “likeable,” in Alan Cooper’s phrasing, as we mentioned in Part 4.

So, as we continue to increase the adoption of intelligent automation systems and applications within our organizations, shame on us if we don’t think first from the point of view of the human. I’m not suggesting that, on day 1, any organization contemplating the deployment of an intelligent system be fully armed with all of the capabilities suggested in the diagram above. I am suggesting, however, that as we increasingly employ these systems in mission-critical tasks that also involve humans, we start to think about how we can improve the overall outcome of the “joint cognitive system” by increasing our awareness of the subtleties of human makeup. There isn’t a “one-size-fits-all” UI, so we’ll increasingly need to build intelligent systems that understand us as much as we try to understand them.

I’d like to develop an Autonetics forum of some kind, so if you are a Gartner end-user or technology provider client who would like to participate in continuing discussions on this topic, please feel free to reach out to me at [email protected].

Thank you.

Autonetics – The Approach to Intelligent Automation Design (Part 5: The Model)