Generative AI, It Is Nice To Meet You

Knowledge management has been around for decades. The promise of AI innovation in knowledge management lingered on the fringes for years: applying AI was just out of reach and not quite ready for prime time. And then into the room walked our new friend, generative AI. It has turned the knowledge management world upside down.

Every day there seems to be a new announcement of advancements and new use cases. Vendors have been quick to see the potential and are racing to develop adoption strategies that take advantage of the capabilities of generative AI and large language models (LLMs) and bring them to market as quickly as possible. But what is the potential for bringing knowledge management and generative AI ideas, practices, methodologies, and advancements together? For now, the main limitation seems to be our own thinking.

Agile Knowledge Management And AI

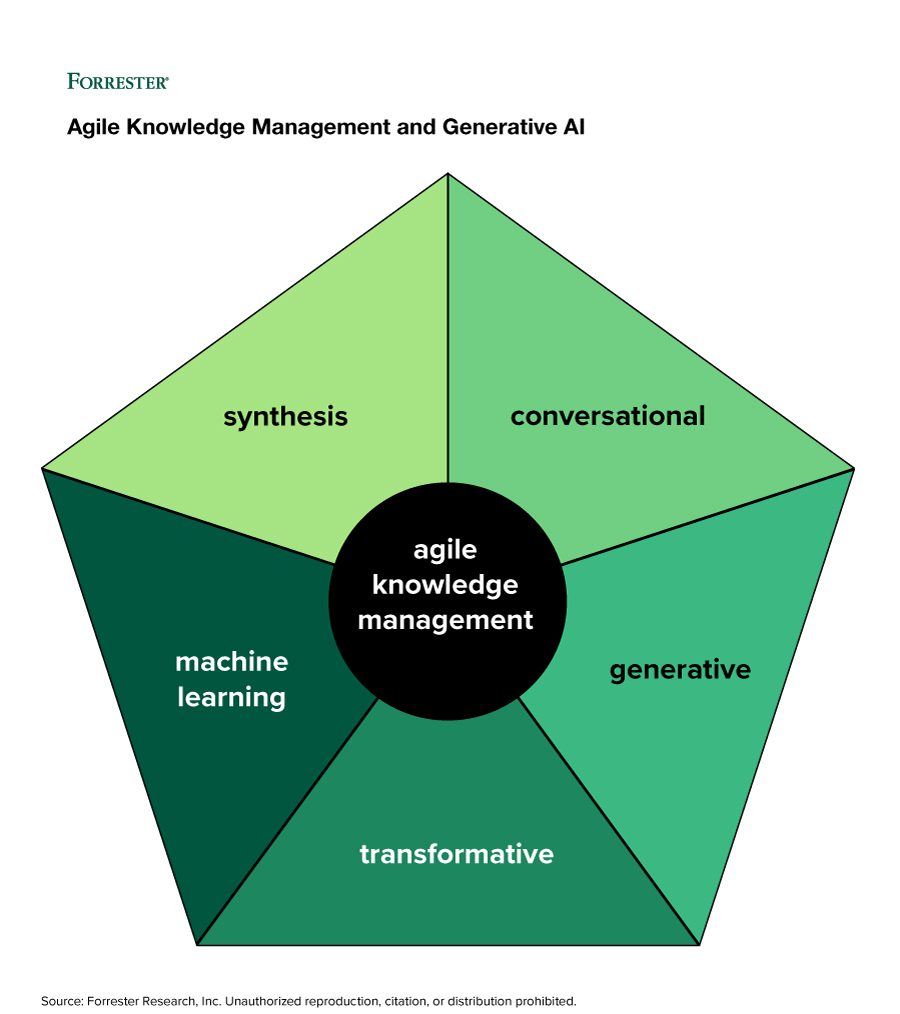

In 2023, Forrester's report on agile knowledge management argued that knowledge management succeeds as an agile practice rather than through the waterfall approach that organizations have embraced for decades. Organizations pursuing modern, resilient operations are embracing agility to drive innovation while also seeking to eliminate waste, improve productivity, and create value.

Knowledge is at the core of innovation, and enhancing knowledge management practices helps accelerate the flow of ideas and collaboration. However, organizations struggle with the time and effort required to capture and maintain the knowledge that a thriving knowledge management practice depends on. That struggle is why we are excited about advancements in generative AI, such as ChatGPT: they can help move knowledge management toward agility. Generative AI can help drive the success of an agile knowledge management practice in the following ways.

Generative = The Power Of The First Draft

One of the critical tenets of knowledge management is knowledge sharing. When knowledge workers share what they know, others benefit from using that knowledge, which means less rework and better operational efficiency. Knowledge workers often resist sharing for several reasons, and time constraints are among the most significant. With generative AI, knowledge workers can benefit from the power of the first draft. Based on what is entered into a system of record during a transaction with an end user or customer (ITSM, ESM, CRM, etc.), prior LLM training on ticket data, and previously curated knowledge articles, a new solution can be generated for the knowledge worker to review and approve as a draft. Generating a first draft lowers the time barrier for knowledge workers, because editing a proposed solution is easier than creating one from scratch.
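To make the first-draft workflow more concrete, here is a minimal sketch in Python, assuming a platform that lets you pass a prompt to an LLM and store the result as a draft. Every name here (`Ticket`, `Article`, `Draft`, `build_draft_prompt`, `create_first_draft`, `approve`) is hypothetical, and the `generate` parameter is a stand-in for whatever model call a given vendor provides; no real product API is implied. The point it illustrates is the one above: the transaction details and curated knowledge feed the draft, and nothing is published until a knowledge worker approves it.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Ticket:
    # Hypothetical fields captured in a system of record (ITSM, ESM, CRM, etc.)
    summary: str
    description: str
    resolution_notes: str


@dataclass
class Article:
    # A previously curated knowledge article
    title: str
    body: str


@dataclass
class Draft:
    text: str
    status: str = "pending_review"  # drafts start unpublished, awaiting a human
    reviewer: str = ""


def build_draft_prompt(ticket: Ticket, curated: List[Article]) -> str:
    """Combine the current transaction with previously curated knowledge."""
    context = "\n\n".join(f"## {a.title}\n{a.body}" for a in curated)
    return (
        "Draft a knowledge article for the issue below. "
        "Use only the reference articles and ticket details provided.\n\n"
        f"Issue: {ticket.summary}\n"
        f"Details: {ticket.description}\n"
        f"Resolution notes: {ticket.resolution_notes}\n\n"
        f"Reference articles:\n{context}"
    )


def create_first_draft(ticket: Ticket, curated: List[Article],
                       generate: Callable[[str], str]) -> Draft:
    # `generate` is a placeholder for the platform's LLM call (an assumption)
    return Draft(text=generate(build_draft_prompt(ticket, curated)))


def approve(draft: Draft, reviewer: str, edited_text: str = "") -> Draft:
    # The knowledge worker optionally edits the draft, then approves it
    if edited_text:
        draft.text = edited_text
    draft.status = "approved"
    draft.reviewer = reviewer
    return draft


if __name__ == "__main__":
    ticket = Ticket("VPN drops after sleep", "Client disconnects on wake",
                    "Reinstalled the network adapter driver")
    kb = [Article("VPN client basics", "Restart the client service first.")]
    # A stand-in generator; a real deployment would call its LLM here
    draft = create_first_draft(ticket, kb, lambda prompt: "Proposed article text")
    print(draft.status)  # pending_review
    approve(draft, reviewer="analyst-1")
    print(draft.status)  # approved
```

Keeping the model call behind a plain function parameter is a design choice for the sketch: it keeps the human review step explicit and makes it easy to test the workflow without any particular vendor's model.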

Additionally, newly hired knowledge workers who may not yet understand an environment, its products, and its services can ask a question and receive a generated response, based on prior knowledge and ticket information, posed as a possible solution. In this case no solution exists yet; one is generated from the knowledge worker's prompt and the LLM's prior learning. There is no more waiting for a subject matter expert to pass down guidance or for an approval process to confirm that the solution adheres to standards. The solution is generated from previously captured knowledge and information in the system of record. Real-time knowledge management becomes possible within the knowledge worker's workflow.

Transformative = Create Knowledge From Facts And Procedures From Policies

LLMs excel at transforming data from one state into another. In knowledge management, this means any knowledge worker can become a knowledge-creation expert. Using an LLM, someone uncomfortable with writing can create a knowledge article from a set of bullets. LLMs are also excellent at summarization, for example turning a complicated policy document into a set of steps an employee can follow to resolve a situation.

Over the past 10 years, organizations have emphasized documentation, including processes, policies, governance requirements, security, products, applications, etc. There is a wealth of information in different formats for specific needs. For example, a product document may be written for consumers but still contain relevant troubleshooting information. Using an LLM and generative AI, a knowledge worker investigating a reported error can turn that product document into a knowledge article with a workaround. These transformative capabilities make it easier for all knowledge workers to be creators of knowledge.

Machine Learning = Continuous Improvement With And Without Human Effort

Subject matter experts and technical writers typically spend significant time creating and perfecting knowledge, only to see it slowly lose relevance and eventually become unreliable and outdated. To combat the problem, organizations put knowledge on an update countdown timer so that subject matter experts would regularly review and update it as needed. However, the update cycle did not ensure that knowledge was actually updated: subject matter experts often just reset the timer or ignored it altogether, in which case the knowledge was automatically archived. In the modern organization, the rapid pace of change and innovation means that knowledge is constantly changing, so updates and improvements need to happen within knowledge workers' workflows every time knowledge is used.

When we look closely at the learning capabilities of generative AI, new knowledge is generated by combining and synthesizing information from various sources. When a user asks a question, the LLM processes the language and analyzes it to determine the most appropriate response: it identifies key concepts and themes, recognizes relationships between different pieces of information, and draws on preexisting knowledge to generate a relevant and coherent response. Once knowledge is surfaced, the human in the loop can review it and provide feedback, not just with a simple thumbs-up or thumbs-down but also by adding their own experience to improve the surfaced knowledge.

Synthesis = Training With Internal And External Content Without Human Effort

An essential aspect of knowledge management is the relevancy of content. An organization’s knowledge management efforts are only as effective as the quality of captured knowledge. If you take technical support as a use case, an LLM can be trained on publicly available knowledge articles published by product and software companies and on internally created knowledge that may reside in an ITSM knowledge module and records, such as previously occurring incidents, problems, and workarounds.

When organizations do not have an extensive knowledge base of reliable solutions, synthesizing publicly available data can help them improve their LLM training. Then, when you write a prompt to ask a question or look for an answer while troubleshooting a common problem, the LLM can draw on internal knowledge sources and also surface knowledge developed externally. The ability to choose specific sites and content can broaden the effectiveness of the internal knowledge base, create a better support experience for the technical analyst, improve knowledge transfer directly to end users, and increase self-service success.
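As a rough illustration of "choosing specific sites and content," here is a minimal Python sketch, assuming a retrieval step that gathers candidate documents before an LLM writes its answer. The `Doc` type, the `allowed`/`score`/`retrieve` functions, and the example hostnames are all hypothetical. The keyword-overlap scoring is a deliberately naive stand-in for the semantic search a real platform would likely use; the sketch only shows the policy: internal knowledge is always eligible, external content counts only if it comes from an approved site, and internal content wins ties.

```python
from dataclasses import dataclass
from typing import List, Set
from urllib.parse import urlparse


@dataclass
class Doc:
    title: str
    text: str
    url: str
    internal: bool  # True for content from the internal knowledge base


def allowed(doc: Doc, allowlist: Set[str]) -> bool:
    # Internal knowledge is always eligible; external content only from chosen sites
    return doc.internal or urlparse(doc.url).hostname in allowlist


def score(query: str, doc: Doc) -> int:
    # Naive keyword overlap, used here only as a placeholder for real search
    terms = set(query.lower().split())
    words = set((doc.title + " " + doc.text).lower().split())
    return len(terms & words)


def retrieve(query: str, docs: List[Doc], allowlist: Set[str], k: int = 3) -> List[Doc]:
    candidates = [d for d in docs if allowed(d, allowlist) and score(query, d) > 0]
    # Highest relevance first; among equal scores, internal content comes first
    candidates.sort(key=lambda d: (score(query, d), d.internal), reverse=True)
    return candidates[:k]


if __name__ == "__main__":
    docs = [
        Doc("Printer offline fix", "restart spooler service", "https://kb.internal/1", True),
        Doc("Printer offline", "check printer cable", "https://support.example.com/a", False),
        Doc("Printer offline guide", "printer offline steps", "https://random.example.net/b", False),
    ]
    hits = retrieve("printer offline", docs, allowlist={"support.example.com"})
    print([d.title for d in hits])  # ['Printer offline fix', 'Printer offline']
```

In this example the third document is relevant but excluded because its site is not on the allowlist, which is the kind of curation the paragraph above describes.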

Conversational = Easy-To-Understand Language Improves End User Self-Service

Self-service has long been heralded as the holy-grail outcome of a successful knowledge management practice: Build a knowledge base with content that lets end users help themselves and reduces the number of human-assisted support incidents, and use automation to build workflows that streamline common requests and push them to end users to minimize the effort required to fulfill those requests. While organizations employing modern, resilient operations have applied these initiatives successfully, end user adoption has not lived up to what was expected or promised.

Conversational capabilities change the interaction for end users from searching and clicking to a much more intuitive exchange of questions and responses. Instead of surfacing written and stored content, the interaction becomes a real, conversationlike experience. The ability to set a tone in writing, for example empathetic or in easy-to-understand terms, can make content easier to follow and meet readers at their level of understanding. Conversationlike responses create a compelling pull for the customer: when the experience works as they expect and gets them back to their work more quickly, they will keep returning to self-service.

As Always, Exercise And Explore With Caution

LLMs are limited by the quality and accuracy of the data used to train them, and they do not truly understand context. Generative AI responses are only as reliable as the information the LLM has been trained on, and most organizations have data integrity problems. Therefore, it is vital for users to critically evaluate the information provided and seek confirmed sources when necessary.

It is clear to us and many others that generative AI can significantly impact an organization's ability to create, improve, find, and transfer knowledge across the enterprise. Over the coming months, we will continue exploring and sharing these possibilities. Given the pace of change, how knowledge management works could shift dramatically in a matter of months or even weeks. We have already seen demonstrations of what the future looks like. It is crazy. It is wild. And as knowledge management enthusiasts, we can't wait to see how it can help knowledge workers be more productive.

Let’s Connect

Have questions? That’s fantastic — let’s connect and continue the conversation! Please reach out to me through social media or request a guidance session. Follow my blogs and research at Forrester.com.

Knowledge Management, I’d Like To Introduce My New Friend, Generative AI