Data observability provides comprehensive visibility into the health of an organization’s data and systems, letting you promptly identify discrepancies, pinpoint the root causes of related issues, and swiftly enforce corrective measures. This level of data awareness is critical for organizations that rely on accurate information for decision-making.

Data observability tools offer a holistic view of your organization’s data environment so your teams can fully understand and manage data assets. Understanding the data observability pillars and hierarchy and the types of tools available can help you implement a data observability framework for your organization. Here’s what you need to know.

What is Data Observability?

Data observability is a key part of data management that provides an in-depth understanding of the health and quality of an organization’s data across various enterprise systems and processes. It involves monitoring, managing, and maintaining data assets to ensure accuracy, validity, and reliability. The process gives a transparent view of data flows, revealing data inconsistencies and making it easier to troubleshoot and resolve data issues in real time.

Importance of Data Observability

Data observability equips businesses with a deep understanding of how their data systems are working, providing insights into the performance, behavior, and interactions of data systems. It enables an organization to uncover and resolve data issues proactively. Here are some of the key reasons why data observability is critically important:

- Transparency: Visibility into an organization’s data flows, data quality, and performance gives stakeholders an understanding of how data is generated, processed, and used. This builds trust and confidence in decision-making processes.

- Reliability: By ensuring the accuracy and trustworthiness of data, data observability boosts the reliability of information used for decision-making. It helps find and correct data errors or inconsistencies promptly, minimizing the risk of making decisions based on faulty or incomplete data.

- Efficiency: Rapid detection and resolution of data issues reduces the downtime they cause. By closely monitoring data pipelines and systems, organizations can optimize performance, maintain operational continuity, and boost productivity.

- Collaboration: Shared understanding of data assets and processes promotes team collaboration and supports communication, knowledge sharing, and interdisciplinary cooperation, aiding teams in working together effectively towards common goals.

Data Observability vs. Data Monitoring

Data observability and data monitoring differ in scope and goals. Data monitoring involves continuous surveillance and tracking of data flows and processes to maintain operational health and performance. Data observability takes a broader approach than traditional monitoring and alerting, providing a more thorough view of data systems.

Data observability does more than mere surveillance; it employs measures to maintain high data quality, trustworthiness, and usability throughout its lifecycle. In addition, data observability includes aspects such as understanding data lineage, managing metadata, guaranteeing data governance, and supporting collaboration among stakeholders.

In short, monitoring informs you about the functionality of the system, while observability enables you to take a closer look into the underlying reasons for any malfunctions.

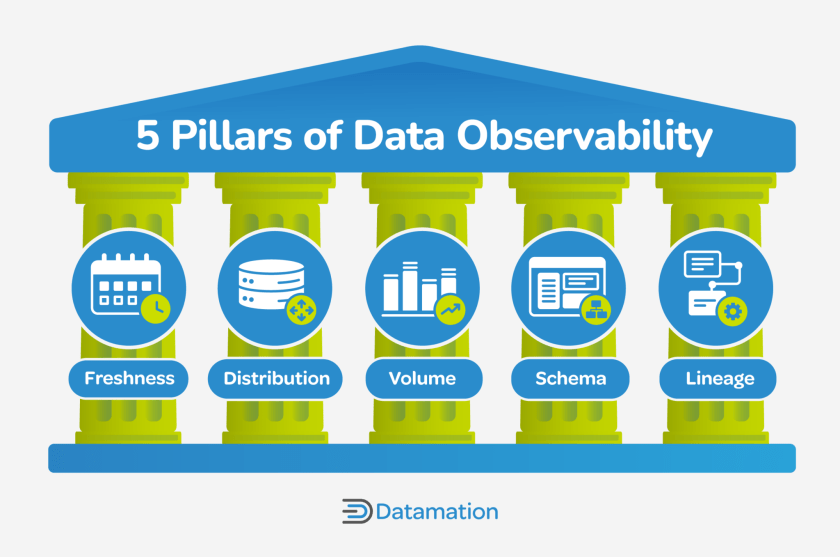

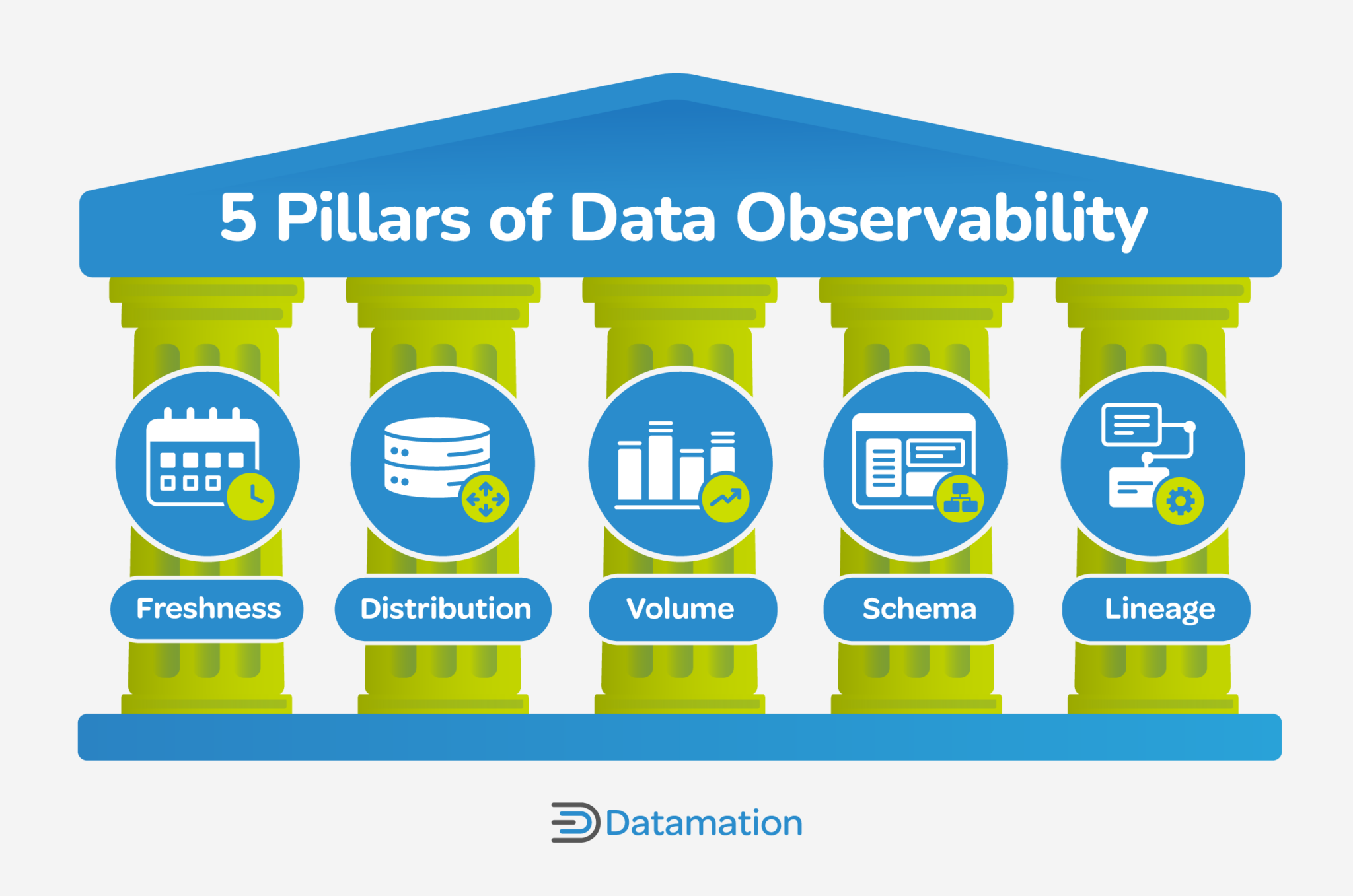

Data Observability: The Five Pillars

Data observability can be broken down into five pillars: freshness, distribution, volume, schema, and lineage. These five pillars collectively offer a thorough examination of data health and present vital insights into the quality and trustworthiness of your enterprise data.

Pillar 1: Freshness

Data freshness, or recency, measures the timeliness and frequency of data updates and pinpoints gaps in data updates, making sure that pipelines are functioning optimally. Freshness is paramount in decision-making, as outdated data can lead to erroneous conclusions and wasted resources.
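A freshness check can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular observability tool works: the function names `is_stale` and `find_update_gaps`, the 24-hour age limit, and the 2-hour expected interval are all assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone

def is_stale(last_updated, max_age, now):
    """Return True when the newest update is older than the allowed age."""
    return now - last_updated > max_age

def find_update_gaps(timestamps, expected_interval):
    """Return (previous, current) pairs of consecutive updates that are
    further apart than the expected interval."""
    ordered = sorted(timestamps)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > expected_interval]

# Hypothetical load times: 30, 29, 26, and 25 hours before "now".
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
loads = [now - timedelta(hours=h) for h in (30, 29, 26, 25)]

stale = is_stale(max(loads), timedelta(hours=24), now)
gaps = find_update_gaps(loads, timedelta(hours=2))
print(stale, len(gaps))
```

Passing `now` in explicitly, instead of reading the clock inside the function, keeps the check deterministic and easy to test.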

Pillar 2: Distribution

Distribution describes how data is dispersed across different systems, locations, or platforms, and whether its values fall within expected ranges. Comparing observed values with expected ones indicates whether the data is reliable and accurate. If values deviate too far from the normal range, there may be a problem with the data source or with data quality.

Data observability tools can spot and notify you about distribution issues and help you correct them before they affect other parts of the pipeline. Data quality is an important part of distribution because it affects how well the data can serve its intended purpose. If the data is distributed across multiple systems or databases, it needs to be consistent, accurate, complete, and valid. Otherwise, the data may not be reliable.
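One simple way to compare values against an expected range is a z-score test against a historical baseline. The sketch below is an assumption-laden example, not a description of a specific product: the function name `out_of_range`, the threshold of 3 standard deviations, and the sample numbers are all hypothetical.

```python
from statistics import mean, stdev

def out_of_range(values, baseline, z_threshold=3.0):
    """Return the values that sit more than z_threshold standard
    deviations away from the mean of the baseline sample."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # needs at least two baseline points
    if sigma == 0:
        # A constant baseline: anything different counts as a deviation.
        return [v for v in values if v != mu]
    return [v for v in values if abs(v - mu) / sigma > z_threshold]

# Hypothetical daily order values used as the "normal" range.
baseline = [100, 102, 98, 101, 99]
flagged = out_of_range([100, 97, 250], baseline)
print(flagged)
```

A z-score assumes the baseline is roughly bell-shaped. For skewed data, a percentile-based range may be a better fit.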

Pillar 3: Volume

Volume measures the quantity and completeness of data moving through your processes and pipelines, which offers insight into its health and reliability. It can be a critical indicator of whether data intake aligns with anticipated thresholds. As with distribution, volume checks confirm that the amount of data received is consistent with historical patterns. Significant deviations in volume may indicate a problem.
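A comparison against historical patterns can be as simple as measuring how far the latest row count sits from the median of recent loads. The following is a minimal sketch under stated assumptions: the names `volume_deviation` and `volume_is_anomalous`, the 50% tolerance, and the row counts are invented for illustration.

```python
from statistics import median

def volume_deviation(current_count, history):
    """Return the relative difference between the current row count
    and the median of historical row counts."""
    baseline = median(history)
    if baseline == 0:
        raise ValueError("historical baseline is zero; cannot compute a ratio")
    return (current_count - baseline) / baseline

def volume_is_anomalous(current_count, history, tolerance=0.5):
    """Return True when the count differs from the baseline by more
    than the tolerance (0.5 means 50%)."""
    return abs(volume_deviation(current_count, history)) > tolerance

# Hypothetical row counts from the last five loads.
history = [1000, 1100, 950, 1050, 1000]
print(volume_is_anomalous(400, history))
print(volume_is_anomalous(1020, history))
```

The median is used here instead of the mean so that one unusual load in the history does not shift the baseline much.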

Pillar 4: Schema

Schemas determine how data is structured and organized in tables, columns, and views. Changes to the schema can cause issues or discrepancies, so monitoring who makes these changes and when is necessary for understanding the health of your data ecosystem.

Data observability verifies that data is organized consistently, is compatible across different systems, and maintains its integrity throughout its lifecycle. Establishing a clear process for who updates the schema and how they do it is a key aspect of a data observability strategy.
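Detecting a schema change usually comes down to comparing the structure you expect with the structure you observe. The sketch below assumes a schema can be represented as a plain mapping of column name to type name. The function `diff_schema` and the example columns are hypothetical.

```python
def diff_schema(expected, observed):
    """Compare two {column_name: type_name} mappings and report
    added, removed, and retyped columns."""
    shared = expected.keys() & observed.keys()
    return {
        "added": sorted(observed.keys() - expected.keys()),
        "removed": sorted(expected.keys() - observed.keys()),
        "type_changed": sorted(c for c in shared if expected[c] != observed[c]),
    }

# Hypothetical table definitions before and after a change.
expected = {"id": "int", "email": "str", "created_at": "timestamp"}
observed = {"id": "str", "email": "str", "signup_source": "str"}

changes = diff_schema(expected, observed)
print(changes)
```

In practice the observed mapping would come from your database or catalog, and the result could be logged alongside who made the change and when.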

Pillar 5: Lineage

This data observability pillar focuses on tracing the impact of changes on data and explains where the data came from in case of issues. Lineage tracks the journey of data from its source to its destination, recording any changes along the way, including what, why, and how the data has been modified.

Lineage is typically shown visually to give a clear picture of the data flow. It identifies the upstream sources and downstream ingestors affected by changes, as well as the teams generating and accessing the data. Advanced lineage also collects metadata on the governance, business, and technical guidelines associated with specific data tables. This information serves as a single source of truth for everyone, promoting data consistency and reliability.
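Finding everything affected by a change is a graph traversal problem. The example below is a minimal sketch that assumes lineage is stored as a mapping from each asset to the assets that read from it. The function `downstream_of` and the table names are made up for illustration.

```python
from collections import deque

def downstream_of(lineage, start):
    """Return every asset reachable from start by following
    'feeds into' edges, using a breadth-first search."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen

# Hypothetical lineage: each key feeds the assets in its list.
lineage = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.churn"],
    "marts.revenue": ["dashboard.finance"],
}

impacted = downstream_of(lineage, "staging.orders")
print(sorted(impacted))
```

Reversing the edges and running the same search from a broken dashboard would list its upstream sources instead.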

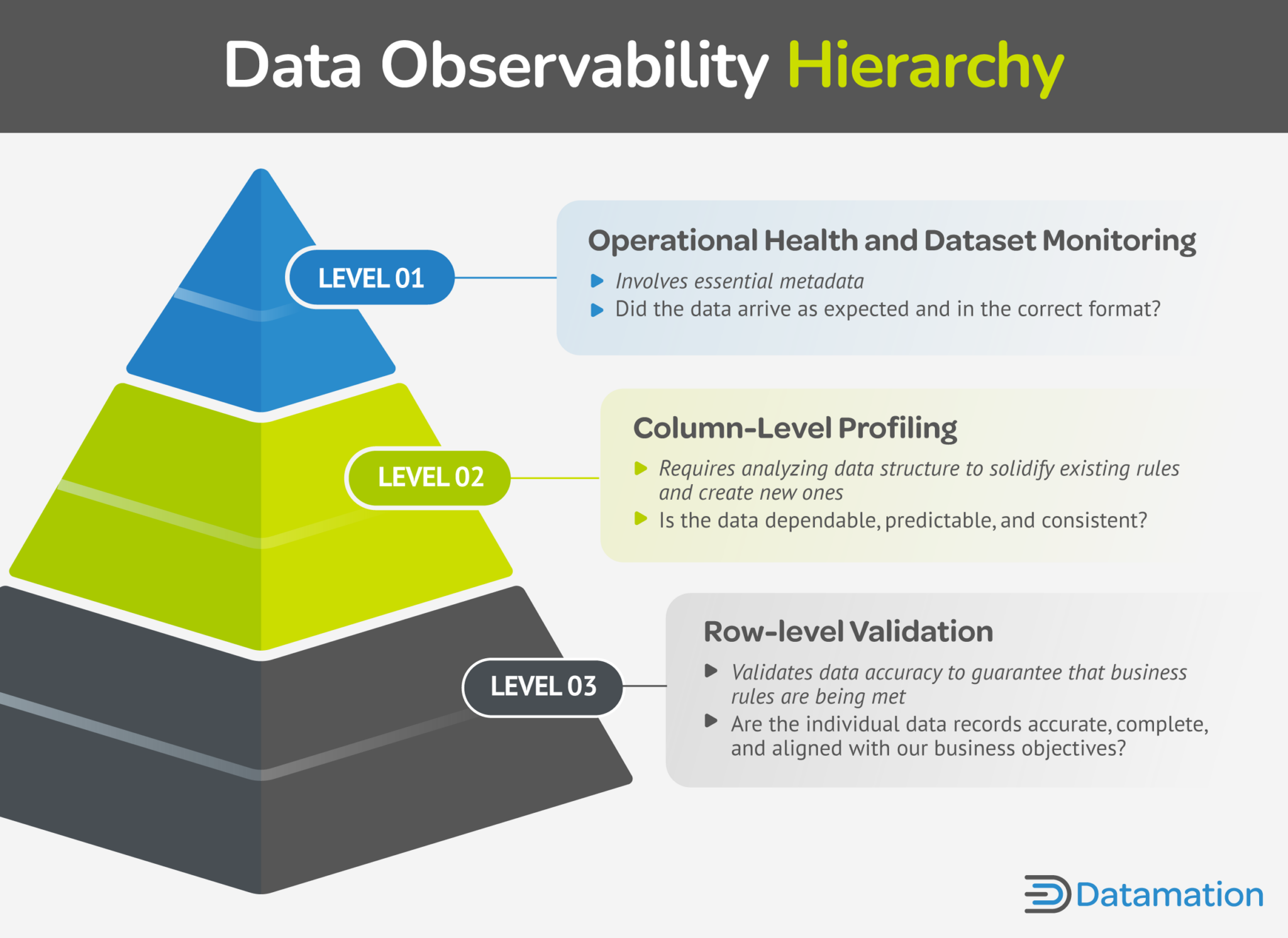

Understanding the Data Observability Hierarchy

The data observability hierarchy is a structured approach to monitoring and validating data quality and reliability. An integral part of the data observability process, it provides a systematic way to gain visibility into the state of data at different levels of granularity. By following this hierarchy, your organization can ensure the accuracy, reliability, and trustworthiness of your data at every level, ultimately enhancing the overall data observability process.

Level 1: Operational Health and Dataset Monitoring

This level involves essential metadata and gives a high-level overview of data operations, confirming that data arrives on time and in the correct format. By keeping an eye on operational health and datasets, you can catch and resolve issues early, which helps maintain the overall quality of your data.

Level 2: Column-Level Profiling

This level involves a detailed examination of specific data categories to solidify existing rules and create new ones. It involves checking if the data is dependable, predictable, and consistent while finding inconsistencies, outliers, or anomalies. This level of insight can refine the accuracy of your data analyses and predictions.
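A column profile is a small set of summary statistics that makes inconsistencies and outliers easier to spot. The following sketch assumes a column is available as a Python list with `None` for missing values. The function name `profile_column` and the choice of statistics are illustrative only.

```python
def profile_column(values):
    """Summarize one column: row count, share of nulls, number of
    distinct non-null values, and the smallest and largest value."""
    non_null = [v for v in values if v is not None]
    total = len(values)
    return {
        "count": total,
        "null_rate": (total - len(non_null)) / total if total else 0.0,
        "distinct": len(set(non_null)),
        "min": min(non_null, default=None),
        "max": max(non_null, default=None),
    }

# Hypothetical "items_per_order" column with one null and one outlier.
profile = profile_column([10, 12, None, 12, 95])
print(profile)
```

Tracking these numbers over time is what turns a one-off profile into a rule, for example "null_rate should stay below 5%".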

Level 3: Row-Level Validation

This level entails a close examination of each individual record against specific rules or conditions to verify that it is correct and that business rules are being met. This helps you catch errors or anomalies at the most granular level, so the data you rely on is as accurate as possible.
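Row-level validation can be expressed as a set of named rules applied to every record. The sketch below is one possible shape for this, not a standard API: the `RULES` mapping, the `validate_rows` function, and the order fields are all assumptions made for the example.

```python
# Each rule is a name plus a function that returns True when the row passes.
RULES = {
    "amount is non-negative": lambda row: row["amount"] >= 0,
    "currency is a 3-letter code": lambda row: isinstance(row["currency"], str)
    and len(row["currency"]) == 3,
    "shipped orders have a ship date": lambda row: row["status"] != "shipped"
    or row["shipped_at"] is not None,
}

def validate_rows(rows, rules=RULES):
    """Return a list of (row_index, rule_name) for every failed check."""
    failures = []
    for index, row in enumerate(rows):
        for name, check in rules.items():
            if not check(row):
                failures.append((index, name))
    return failures

# Hypothetical order records: the second one breaks all three rules.
rows = [
    {"amount": 25.0, "currency": "USD", "status": "shipped", "shipped_at": "2024-01-02"},
    {"amount": -5.0, "currency": "US", "status": "shipped", "shipped_at": None},
]

failures = validate_rows(rows)
print(failures)
```

Returning the rule name with each failure makes it easier to trace a bad record back to the business rule it violates.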

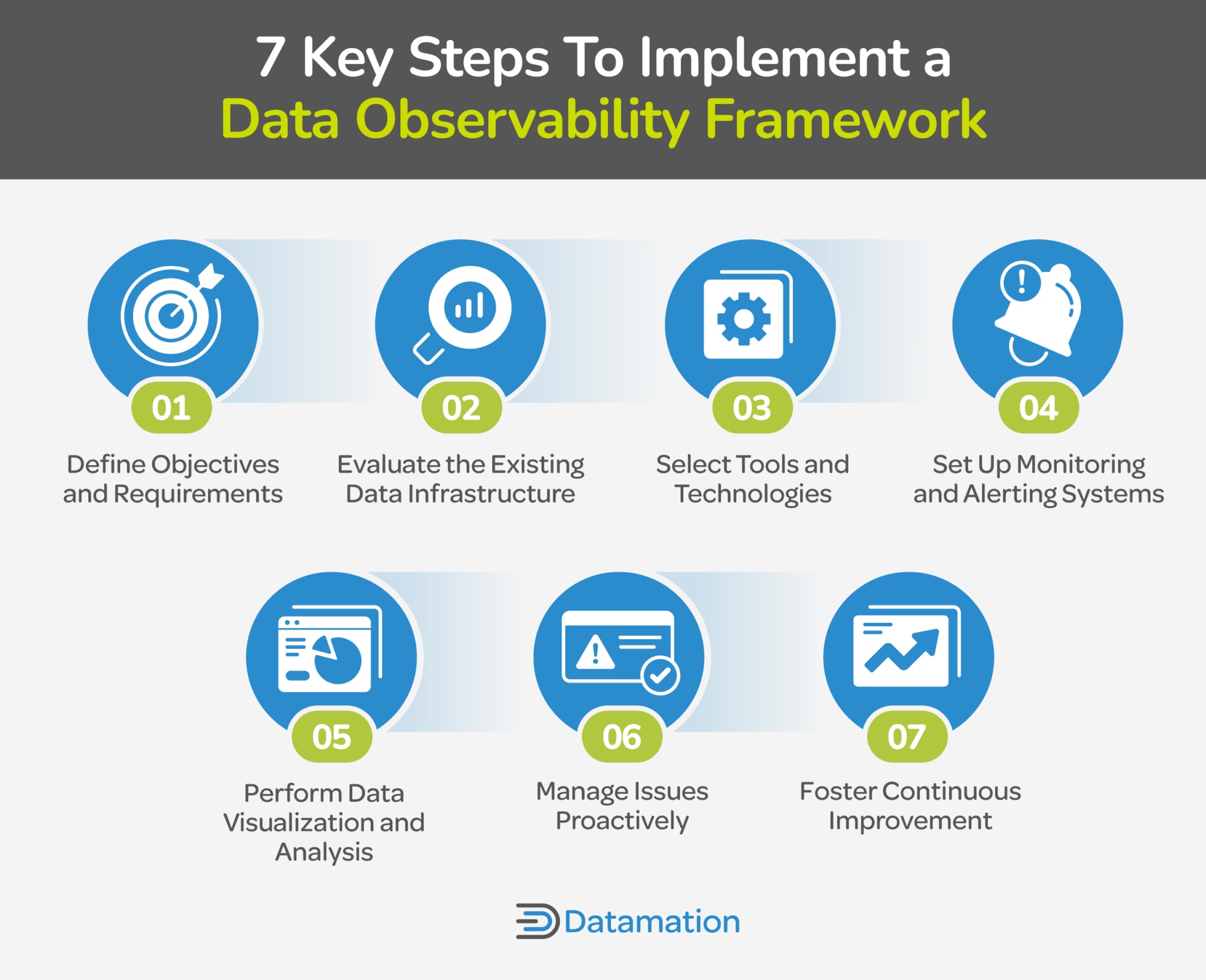

7 Key Steps To Implement a Data Observability Framework

Implementing a data observability framework is important to maintain the reliability, accessibility, and trustworthiness of your enterprise data assets. This framework consists of seven key steps designed to enhance data management practices, optimize data infrastructure, and support informed decision-making. By following these steps in order, your organization can effectively monitor data health, detect anomalies, and maintain data quality.

- Define Objectives and Requirements: Clearly outline the goals and needs of your data observability framework based on your business requirements. Define what you specifically aim to achieve to meet those objectives.

- Evaluate Existing Infrastructure: Assess your current enterprise data systems, processes, and tools. Determine strengths, weaknesses, and areas for improvement to inform the design of your observability framework.

- Select Tools and Technologies: Choose tools and technologies that align with your objectives and needs. Consider factors such as scalability, compatibility, and ease of use when selecting tools for monitoring, observability, analysis, and visualization.

- Set Up Monitoring and Alerting Systems: Establish monitoring and alerting mechanisms to track key metrics and indicators in your data infrastructure. Define thresholds and triggers for alerts to notify stakeholders of potential issues or anomalies in real time.

- Perform Data Visualization and Analysis: Equip your team with intuitive data visualization and analysis tools. Let them explore and interpret data insights, encouraging informed decision-making throughout your organization.

- Manage Issues Proactively: Integrate measures to preemptively detect and resolve potential data infrastructure issues. This involves deploying automated alerts and checks to detect anomalies and deviations from expected data behavior, as well as establishing agile response protocols for swift resolution.

- Foster Continuous Improvement: Build a culture of ongoing refinement around your data observability framework. Regularly review processes, tools, and practices to adapt to changing business needs, technological advancements, and data challenges. Encourage feedback and prioritize learning so the framework stays effective and relevant over time.
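The thresholds and triggers mentioned in the monitoring and alerting step can be written down as simple configuration and evaluated against the latest metrics. This is a minimal sketch under stated assumptions: the `Threshold` class, the `evaluate` function, the metric names, and the limits are hypothetical and not taken from any specific tool.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Threshold:
    metric: str      # name of the metric to watch
    warn: float      # value at or above which a warning is raised
    critical: float  # value at or above which a critical alert is raised

def evaluate(metrics, thresholds):
    """Return (metric, severity) pairs for every threshold that is
    breached, or whose metric was not reported at all."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            alerts.append((t.metric, "missing"))
        elif value >= t.critical:
            alerts.append((t.metric, "critical"))
        elif value >= t.warn:
            alerts.append((t.metric, "warning"))
    return alerts

# Hypothetical thresholds and a hypothetical metrics snapshot.
thresholds = [
    Threshold("null_rate", warn=0.05, critical=0.20),
    Threshold("hours_since_last_load", warn=6, critical=24),
    Threshold("schema_changes", warn=1, critical=5),
]
metrics = {"null_rate": 0.08, "hours_since_last_load": 30}

alerts = evaluate(metrics, thresholds)
print(alerts)
```

Treating a missing metric as its own alert is a deliberate choice here, since a check that silently stops reporting can hide a real problem.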

3 Top Data Observability Tools

There are many data observability tools that can deliver visibility into your data pipelines and business systems and processes, enabling anomaly detection, maintaining data quality, and fueling informed decision-making. From real-time monitoring and alerting to comprehensive data analysis and visualization, the top data observability tools offer a range of functionalities to help organizations maximize the value of their data assets.

Dynatrace

Dynatrace provides observability, application performance monitoring (APM), and digital experience capabilities. It comes with full-stack visibility across cloud and hybrid environments, and continuous auto-discovery of hosts. It also automates monitoring and troubleshooting, allowing data teams to identify and resolve data-related issues promptly and focus on strategic projects.

By monitoring the digital experience using Dynatrace, teams gain insights into user interactions and can fine-tune applications based on real-time feedback. This data observability tool integrates with multiple third-party tools, offers extensive customer support, and can be configured and deployed quickly.

Due to its extensive feature set, Dynatrace has a steep learning curve. However, once mastered, it can be a valuable addition to the company, providing powerful tools for monitoring and streamlining performance across your entire IT environment.

SolarWinds Observability

SolarWinds Observability offers a complete view across multiple aspects of your IT infrastructure. It consolidates and analyzes data from logs, metrics, traces, database queries, and user experiences, establishing a unified source of truth. This data observability tool employs automated instrumentation and dependency mapping, presenting a holistic representation of the application and server interactions. As a result, data teams can visualize and consistently analyze business service relationships, component variations, and dependencies.

In addition, this tool supports open-source frameworks and seamlessly integrates with third-party applications, further increasing its versatility and compatibility with diverse IT environments.

While SolarWinds Observability is a powerful tool, it requires a high level of expertise to manage. Its complex nature is something to consider, but the sophisticated features and AI enhancements it provides can make the effort worthwhile.

Datadog Observability Platform

The Datadog Observability Platform offers end-to-end visibility into the health and performance of your entire technology stack. It unifies metrics, traces, and logs to simplify monitoring and managing complex systems. It can also monitor cloud, hybrid, or multi-cloud environments, as well as on-premises servers.

This tool stands out due to its ability to integrate with over 700 technologies and services, giving a 360-degree view of the infrastructure. Additionally, Datadog has cutting-edge features like machine learning-based alerts and anomaly detection that pinpoint issues in the IT ecosystem, applications, and services, reducing the time needed for resolution.

Like other data observability tools, the Datadog Observability Platform has some drawbacks. For instance, you need to purchase a separate support plan to contact the customer support team. However, its advanced capabilities, such as AI-powered anomaly detection and extensive third-party integrations, make it a strong contender among data observability tools.

Frequently Asked Questions (FAQs)

Why Do You Need Data Observability?

You need data observability because it ensures the transparency, reliability, and accountability of your data pipelines and processes. It aids your organization in monitoring data quality, detecting anomalies or errors in real time, troubleshooting issues quickly, and maintaining trust in your data-driven decision-making processes.

What is the Difference Between Observability and Data Observability?

Data observability is a subset of observability that concentrates on tracking and comprehending data quality and behavior within systems or pipelines. In general, observability pertains to the ability to understand a system’s internal state based on its external outputs, like log files or error messages.

What is AI Observability?

AI observability offers deep insights into the performance and behavior of machine learning (ML) models, helping to confirm their effectiveness and reliability. It aids in compliance reporting and system optimization, detects biases and limitations, and supports efficient resource utilization. It is closely tied to data observability, as the quality of data directly influences AI performance. Together, they provide a comprehensive view of your data ecosystem, facilitating the creation of responsible AI models.

Bottom Line: Get a Broader Perspective With Data Observability

Understanding data observability opens doors to a broader perspective on data management and utilization. It emphasizes the significance of maintaining data integrity, accessibility, and optimization. By embracing data observability, you can take a proactive approach to data governance, enabling you to detect anomalies and anticipate trends. This understanding promotes a culture of collaboration and innovation within your organization, as stakeholders gain a shared appreciation for the value and potential of your data assets.

Implementing a data observability framework and leveraging reliable data observability tools empowers your organization to address issues swiftly, preserve data integrity, uncover valuable insights, and make effective decisions, contributing to overall business success.

To see which data observability tools are trusted today and what they have to offer, read our article on the top data observability tools of 2024.