Investment Thesis

I believe that, buried in recent conference transcripts and news articles, there is evidence that Advanced Micro Devices, Inc. (NASDAQ:AMD) is making strong progress on their competitor to Nvidia Corporation's (NVDA) CUDA, progress that is set to change the way GPU purchasing decisions are made at major hyperscalers. Nvidia controls a large portion of the current GPU market through CUDA, their flagship software platform, which lets multiple GPUs work together as one large computing module for large language model (“LLM”) training and inference.

To disrupt Nvidia’s dominance, AMD plans to use their open-source software alternatives on top of their new MI300 series to attract companies looking for other options. With the AI GPU data center market currently projected to grow to $400 billion by 2027, AMD has a unique opportunity. Used correctly, AMD’s new open-source solutions will help them gain market share against Nvidia, which is why I continue to see the stock as a strong buy for investors (rating upgrade).

Why I Am Doing Follow-Up Coverage

I last reported on AMD before their Q4 2023 earnings report, where I argued that new 2.5/3D chips made by AMD were uniquely set to help the company make strong headway into the GPU market. Since then, the firm has reported a strong quarter (I talk about the key parts below).

The big point I want to highlight, however, is that the firm raised their 2024 MI300X sales guidance from $2 billion previously to $3.5 billion now. As I covered in my Q4 earnings preview, I thought $2 billion was conservative (and I think $3.5 billion is too, for that matter). But that’s not the point of this follow-up coverage.

My goal here is to explain how they plan to hit these numbers and actually disrupt Nvidia, which by some measures controls over 90% of the key AI data center GPU market (Wells Fargo believes 98%). I believe their next leg up actually has nothing to do with the chips they make, even though the chips are necessary. The next leg of growth comes from getting the market to stop relying so heavily on CUDA to link GPUs together. Once this happens, I believe the market share growth will accelerate. Recent industry trends indicate that this shift could happen soon, with both AMD customers and competitors working on CUDA alternatives. I expect AMD to be a part of this too.

Background: What Is CUDA?

CUDA (Compute Unified Device Architecture), developed in 2006 by a team led by Ian Buck at Nvidia, is a parallel computing platform and application programming interface (API) model. The platform increases computing performance by putting a graphics processing unit (GPU) to work on general-purpose processing, a technique known as GPGPU (General-Purpose computing on Graphics Processing Units), and it revolutionized the way software developers use GPUs.

Originally designed for scientific computing, the use of CUDA expanded around 2015 when companies started utilizing its power for neural networks. CUDA allows for the development, optimization, and deployment of applications across various systems, from embedded devices to supercomputers. It provides developers with libraries, compiler directives, and extensions to standard programming languages such as C, C++, Fortran, and Python. By making it easier to build parallelism into applications, these features speed up computational tasks.

CUDA’s success comes from its ability to provide direct access to the GPU's virtual instruction set and parallel computational elements, allowing for the execution of compute kernels that simultaneously perform numerous calculations. This has led to the widespread adoption of CUDA across multiple industries, including high-performance computing, deep learning, and scientific research. As mentioned above, part of CUDA’s expansion was driven by its use for neural networks. CUDA enables the rapid training of these networks, reducing computation time from months to days or even hours in some cases.

Despite the emergence of competing technologies like OpenCL, CUDA has remained the preferred choice for GPU-accelerated computing, particularly on Nvidia’s GPUs, due to its performance efficiency and the comprehensive ecosystem Nvidia has built around it. While CUDA is the current preferred choice, I predict AMD will soon change this.

Q4 Was Strong, Laying The Groundwork To Disrupt

The fourth quarter of 2023 was promising for AMD, allowing them to secure their foundation to challenge Nvidia's CUDA dominance. AMD reported revenue of $6.2 billion, up 10% year over year, driven largely by the Data Center and Client segments.

Lisa Su, AMD’s CEO, spoke on the performance of the Data Center segment, stating:

Data Center sales accelerated significantly throughout the year…driven by the ramp of Instinct AI accelerators and robust demand for EPYC server CPUs across cloud, enterprise, and AI customers – Q4 Conference Call.

I credit this notable revenue growth not only to the strength of the Data Center segment, but also to AMD's competitive pricing and innovative technology, which are persuading companies to consider alternatives to the CUDA framework. I think this is the first phase of how they get customers to switch. First, get clients to install their hardware. Then present them with a new system that is more accommodating than CUDA.

Lisa Su also pointed out the rapid adoption of AMD's AI accelerators: “Customer deployments of our Instinct GPUs continue accelerating, with MI300 now tracking to be the fastest revenue ramp of any product in our history.” The combination of rising revenue and accelerating customer deployments indicates a strong market response and promising potential for future growth. One of the buyers of AMD’s technologies is Meta.

How AMD Plans To Disrupt CUDA

As discussed above, Nvidia’s CUDA gives them a strong competitive position. To challenge this, AMD plans to cultivate an open-source equivalent to CUDA that runs on HIP/ROCm. This equivalent aims to create an environment where both Nvidia and AMD GPUs can operate seamlessly within the same system, reducing GPU platform barriers. To accelerate development, AMD funded a project that produced a drop-in CUDA replacement on the ROCm platform, allowing CUDA-enabled software to run on AMD's hardware. On top of this, AMD has also partnered with companies like Google and Intel to create competitive hardware solutions.

Mark Papermaster, AMD’s Chief Technology Officer, mentioned the significance of their ROCm software in enabling a vendor-agnostic environment that can translate high-level frameworks across different hardware platforms. He stated:

ROCm, as I said earlier, is our software enablement stack. And it's critical because when customers run, they program often at a high level and framework… And you have to optimize it to really deliver that value, that total cost of ownership – Investor Webinar Conference.

ROCm optimizes performance and user experience and makes it so that companies are not bound to a single hardware vendor. This approach challenges CUDA's proprietary nature.

To demonstrate AMD’s long-term investment in GPU technology, Jean Hu, AMD’s CFO, highlighted the success of their MI300 series. She stated:

Yes, we literally launched MI300 last December… with like really strong support from cloud customers that we have, Microsoft, Meta, Oracle, and OEMs, Dell, SuperMicro, HPE, and also the whole ecosystem, and our team is super focused on aggressively ramping into production – Morgan Stanley Investor Conference.

Hu focused on the quick adoption and production of the MI300 series to showcase AMD’s market penetration abilities. These are all companies that are making purchasing decisions away from Nvidia (the default choice in this market). I am encouraged.

Valuation

With the AI sector as a whole expected to experience significant growth, AMD shows serious potential within this market. Estimates suggest the AI data center market could reach a total addressable market (TAM) of $400 billion by 2027. If AMD can successfully challenge competitors like Nvidia, they open up an opportunity to capture a large portion of this market. Given that Nvidia owns almost all of it right now, the sky is the limit in a way.

This market growth is heavily based on the integration of AI solutions in fields such as automotive, IT & telecom, and healthcare, each of which feeds demand for AI data center capacity. As of now, North America leads the AI market, but areas like Asia Pacific are predicted to experience high growth rates as well. AMD can ride both of these trends well.

AMD has recognized the expanding AI market and has adjusted their market growth estimates accordingly. Initially, AMD estimated the data center AI market would grow by 50% annually, reaching $150 billion in 2027. However, based on the pace of market adoption, AMD raised their estimate, projecting a 70% annual growth rate and a market value of $400 billion in 2027. AMD has also claimed that the market potential for their AI accelerators will reach $45 billion. Against that figure, analysts predict AMD will capture at least a 20% market share.
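
To put the two forecasts side by side, the growth rates can be unwound into an implied starting market size. This is a rough back-of-envelope sketch, not AMD's own math: it assumes four years of compounding (2023 through 2027), which is my assumption, and the function name `implied_base_market` is a hypothetical helper of my own.

```python
# Back-of-envelope sketch: unwind a compound annual growth rate (CAGR)
# to see what starting market size each forecast implies.
# ASSUMPTION: four compounding years (2023 -> 2027); the text does not state the base year.

YEARS_OF_GROWTH = 4


def implied_base_market(target_usd_b: float, annual_growth: float, years: int) -> float:
    """Return the starting market size (in $B) that grows to target_usd_b
    after `years` of compounding at `annual_growth` (0.50 means 50%)."""
    return target_usd_b / (1.0 + annual_growth) ** years


# Figures quoted in the text: $150B at 50%/year, later revised to $400B at 70%/year.
original_base = implied_base_market(150.0, 0.50, YEARS_OF_GROWTH)
revised_base = implied_base_market(400.0, 0.70, YEARS_OF_GROWTH)

print(f"Original forecast implies a base of about ${original_base:.1f}B")
print(f"Revised forecast implies a base of about ${revised_base:.1f}B")
```

Under that four-year assumption, the original forecast implies a starting market of roughly $30 billion and the revised one roughly $48 billion. In other words, the revision raised both the growth rate and the implied starting point.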

If AMD can capture a 20% share of that $45 billion AI accelerator market, this could unlock $9 billion in revenue through the MI300X series. I previously argued that there is up to $9.5 billion in revenue possible through the MI300X series this year. I have dropped my estimate to $9 billion now given that the ASP (average selling price) of the MI300X is lower, at about $10,000-$15,000/unit vs. my expectation of $20,000/unit.

Given $9 billion in revenue, if the company's current price/sales multiple of roughly 10x were applied to this revenue, it could represent $90 billion in market cap upside for the stock, or 32.66% upside from where the stock is today.
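
The arithmetic behind this valuation can be laid out step by step. The sketch below only reuses the figures quoted in the text ($45 billion market, 20% share, roughly 10x price/sales, 32.66% upside); the function names are hypothetical helpers of my own, and the implied current market cap is simply backed out of the quoted upside percentage rather than taken from market data.

```python
# Illustrative sketch of the valuation arithmetic, using only figures quoted in the text.
# All dollar amounts are in billions of USD.

ACCELERATOR_MARKET = 45.0   # AMD's cited AI accelerator market potential
ASSUMED_SHARE = 0.20        # share analysts expect AMD to capture
PRICE_TO_SALES = 10.0       # AMD's rough current price/sales multiple
QUOTED_UPSIDE = 0.3266      # upside quoted in the text (32.66%)


def revenue_from_share(market: float, share: float) -> float:
    """Revenue captured at a given share of the market."""
    return market * share


def market_cap_uplift(revenue: float, multiple: float) -> float:
    """Market cap added if the revenue is valued at the given price/sales multiple."""
    return revenue * multiple


def implied_current_market_cap(uplift: float, upside: float) -> float:
    """Back out the current market cap implied by an uplift and an upside percentage."""
    return uplift / upside


revenue = revenue_from_share(ACCELERATOR_MARKET, ASSUMED_SHARE)
uplift = market_cap_uplift(revenue, PRICE_TO_SALES)
current_cap = implied_current_market_cap(uplift, QUOTED_UPSIDE)

print(f"Revenue at 20% share: ${revenue:.1f}B")
print(f"Market cap uplift at 10x sales: ${uplift:.1f}B")
print(f"Implied current market cap: ${current_cap:.1f}B")
```

Read this way, the 32.66% figure implies a current market cap of roughly $276 billion. The result is most sensitive to the price/sales multiple, so a lower multiple on the new revenue would shrink the upside proportionally.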

Why I Do Not Think This Is Priced In & Updates From My Last Valuation

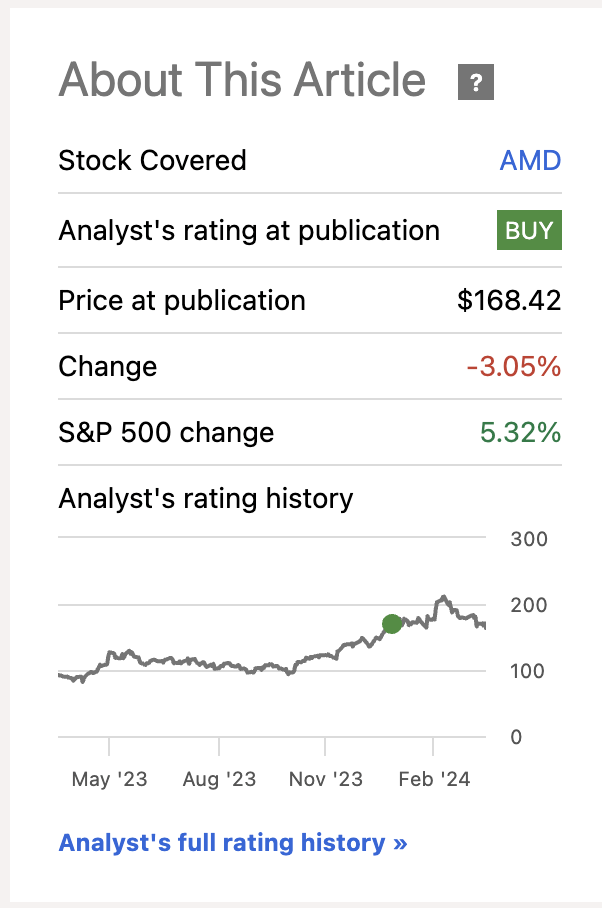

Last time I wrote about AMD, I said the stock had about 20% upside from the roughly $168/share it was trading at going into earnings. Now, post earnings (and after the MI300X guidance upgrade from the company), the stock is actually down 3.05%. We now have a company with better growth forecasts than before, and the stock has gone down. I think this is incredible.

AMD Performance Since Last Publication (Seeking Alpha)

On top of this, the company’s PEG ratio is 1.00 vs. a sector median of 1.94. I’m really encouraged by the upside opportunity here given that the company now has better growth prospects, a strategy to disrupt the biggest hindrance to future growth (CUDA), and a PEG ratio far below the sector median.

Risks

In such a fast-developing market, a company must always maintain a competitive edge. Although Nvidia is one of the leading figures in this market, this doesn’t mean they are flat-footed. To accelerate production of their GPUs, Nvidia developed an AI software called ChipNeMo. This software is a large language model that can answer questions that arise during production. Producing GPUs sometimes requires thousands of people, so with a technology like ChipNeMo, the risk of confusion or miscommunication is reduced. By implementing this software, Nvidia has already seen an increase in GPU production efficiency.

AMD is answering Nvidia’s advancements in GPU production with even stronger innovation in their own product line and open-source initiatives. Jean Hu, CFO of AMD, acknowledged the significance of competition in technology markets, especially one as large and diverse as the AI and GPU market. She pointed out the evolving open-source ecosystem, suggesting a shift away from a singular dependency on CUDA:

“Especially a lot of open source ecosystems continue to evolve. It's not like everybody writes their model on CUDA. There are more and more people writing models on the open-source ecosystem.” – Morgan Stanley TMT Conference.

AMD’s focus on open-source solutions like ROCm has been a key part of their strategy to provide better Total Cost of Ownership (TCO) for customers.

The MI300 was first previewed in January 2023 and, according to Jean Hu, the “MI300… it's actually a chiplet design, have multiple chips and packages it together… AMD actually is the leader and innovates the very early on in chiplet design.”

She also mentioned that since AMD introduced the MI300, Nvidia has sped up the cadence of their own product introductions. She then went on to assure investors that:

“Since we introduced MI300 we have seen Nvidia continue to accelerate the cadence of the product introduction. You should expect us to also do the same.”

China Is The Other Elephant In The Room

The other major concern I’ve noticed among investors is the Chinese government's bans on sales of AMD chips to both state enterprises and telecom carriers. While AMD currently gets 15% of their revenue from China, new chips like the MI300X will likely be unable to be sold in China anyway due to export restrictions from the U.S. government. This means the upside in AMD was never going to be found in China. I expect strong sales of the MI300X series using the new open-source alternatives to CUDA will be more than enough to offset lost China sales.

Bottom Line

With the successful release of AMD’s MI300 chip and their Q4 performance, I believe AMD is well on their way to challenging Nvidia’s dominant position in the GPU market. New developments on their open-source CUDA alternative further accelerate this. By creating a CUDA alternative, AMD will allow companies to use different types of GPU technologies within the same platform. I’m optimistic. Considering the investments made by AMD along with the projected growth of the AI market, I predict they are likely to capture a significant share of the future market.

While the company is dealing with continuous competition risks from Nvidia (their new chips are undoubtedly powerful) and faces a drop-off in sales from China in light of new telecom and government use case bans, I think these will be noise eventually. The company (due to restrictions from the U.S. Commerce Department) likely already could not sell the chips that will make up the future of the company to customers in China. These bans in China will simply help accelerate the transition for the firm to lean into this new strategy.

I believe AMD now represents a strong buy (rating upgrade) for investors keen on tapping into the burgeoning AI and GPU sectors from a disruptor’s angle.