This is a guest post by Siva Manickam and Prahalathan M from Vyaire Medical Inc.

Vyaire Medical Inc. is a global company, headquartered in suburban Chicago, focused exclusively on supporting breathing through every stage of life. Established from legacy brands with a 65-year history of pioneering breathing technology, the company’s portfolio of integrated solutions is designed to enable, enhance, and extend lives.

At Vyaire, our team of 4,000 pledges to advance innovation and evolve what’s possible to ensure every breath is taken to its fullest. Vyaire’s products are available in more than 100 countries and are recognized, trusted, and preferred by specialists throughout the respiratory community worldwide. Vyaire has a 65-year history of clinical experience and leadership with over 27,000 unique products and 370,000 customers worldwide.

Vyaire Medical’s applications landscape has multiple ERPs, such as SAP ECC, JD Edwards, Microsoft Dynamics AX, SAP Business One, Pointman, and Made2Manage. Vyaire uses Salesforce as our CRM platform and the ServiceMax CRM add-on for managing field service capabilities. Vyaire developed a custom data integration platform, iDataHub, powered by AWS services such as AWS Glue, AWS Lambda, and Amazon API Gateway.

In this post, we share how we extracted data from SAP ERP using AWS Glue and the SAP SDK.

Business and technical challenges

Vyaire is deploying the field service management solution ServiceMax (SMAX, built natively on the SFDC ecosystem), which offers features and services that help Vyaire’s Field Services team improve asset uptime with optimized in-person and remote service, boost technician productivity with the latest mobile tools, and deliver metrics for confident decision-making.

A major challenge with the ServiceMax implementation is building a data pipeline between ERP and the ServiceMax application, specifically integrating pricing, orders, and primary data (product, customer) from SAP ERP to ServiceMax using Vyaire’s custom-built integration platform iDataHub.

Solution overview

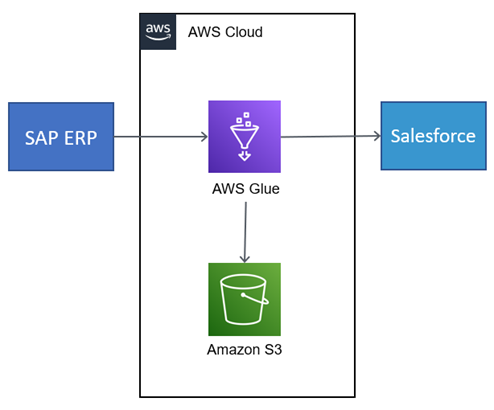

Vyaire’s iDataHub powered by AWS Glue has been effectively used for data movement between SAP ERP and ServiceMax.

AWS Glue a serverless data integration service that makes it easy to discover, prepare, and combine data for analytics, machine learning (ML), and application development. It’s used in Vyaire’s Enterprise iDatahub Platform for facilitating data movement across different systems, however the focus for this post is to discuss the integration between SAP ERP and Salesforce SMAX.

The following diagram illustrates the integration architecture between Vyaire’s Salesforce ServiceMax and SAP ERP system.

In the following sections, we walk through setting up a connection to SAP ERP using AWS Glue and the SAP SDK through remote function calls. The high-level steps are as follows:

- Clone the PyRFC module from GitHub.

- Set up the SAP SDK on an Amazon Elastic Compute Cloud (Amazon EC2) machine.

- Create the PyRFC wheel file.

- Merge SAP SDK files into the PyRFC wheel file.

- Test the connection with SAP using the wheel file.

Prerequisites

For this walkthrough, you should have the following:

- An AWS account.

- The Linux version of the NW RFC SDK from an SAP-licensed source. For more information, refer to Download and Installation of NW RFC SDK.

- The AWS Command Line Interface (AWS CLI) configured. For instructions, refer to Configuration basics.

Clone the PyRFC module from GitHub

For instructions for creating and connecting to an Amazon Linux 2 AMI EC2 instance, refer to Tutorial: Get started with Amazon EC2 Linux instances.

We chose an Amazon Linux EC2 instance so that the SDK and PyRFC are compiled in a Linux environment that is compatible with AWS Glue.

At the time of writing this post, AWS Glue’s latest supported Python version is 3.7. Ensure that the Amazon EC2 Linux Python version and AWS Glue Python version are the same. In the following instructions, we install Python 3.7 in Amazon EC2; we can follow the same instructions to install future versions of Python.
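A quick way to confirm the match is to compare the interpreter version on the EC2 instance with the version the AWS Glue job will use. The following is a minimal sketch only; the helper name `matches_glue_python` is hypothetical, and the default target of (3, 7) is an assumption taken from the version stated above.

```python
import sys


def matches_glue_python(target=(3, 7), version_info=None):
    """Return True when the interpreter's major.minor version equals the target."""
    info = sys.version_info if version_info is None else version_info
    return (info[0], info[1]) == tuple(target)


# Report the result for the interpreter that runs this script.
current = "{}.{}".format(sys.version_info[0], sys.version_info[1])
if matches_glue_python():
    print("Python " + current + " matches the assumed AWS Glue version 3.7")
else:
    print("Python " + current + " differs from the assumed AWS Glue version 3.7")
```

Running this with `python3` on the EC2 instance before building the wheel shows whether the build interpreter and the assumed AWS Glue interpreter agree.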

- In the bash terminal of the EC2 instance, run the following command:

sudo yum install -y python3

- Log in to the Linux terminal, install git, and clone the PyRFC module using the following commands:

ssh -i "aws-glue-ec2.pem" [email protected]

mkdir aws_to_sap

cd aws_to_sap

sudo yum install git

git clone https://github.com/SAP/PyRFC.git

Set up the SAP SDK on an Amazon EC2 machine

To set up the SAP SDK, complete the following steps:

- Download the nwrfcsdk.zip file from a licensed SAP source to your local machine.

- In a new terminal on your local machine, run the following command to copy the nwrfcsdk.zip file to the aws_to_sap folder on the EC2 instance:

scp -i "aws-glue-ec2.pem" -r "c:\nwrfcsdk\nwrfcsdk.zip" [email protected]:/home/ec2-user/aws_to_sap/

- Unzip the nwrfcsdk.zip file in the current EC2 working directory and verify the contents:

unzip nwrfcsdk.zip

- Configure the SAP SDK environment variable SAPNWRFC_HOME and verify the contents:

export SAPNWRFC_HOME=/home/ec2-user/aws_to_sap/nwrfcsdk

ls $SAPNWRFC_HOME

Create the PyRFC wheel file

Complete the following steps to create your wheel file:

- On the EC2 instance, install the Python modules cython and wheel, which are needed to generate wheel files, using the following command:

pip3 install cython wheel

- Navigate to the PyRFC directory you created and run the following command to generate the wheel file:

python3 setup.py bdist_wheel

Verify that the pyrfc-2.5.0-cp37-cp37m-linux_x86_64.whl wheel file is created in the PyRFC/dist folder. Note that you may see a different wheel file name based on the latest PyRFC version on GitHub.
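The wheel file name encodes the Python version, ABI, and platform it was built for, so it can be checked against the AWS Glue Python version before going further. The sketch below assumes the standard wheel naming convention (name, version, optional build tag, Python tag, ABI tag, platform tag); the helper names `wheel_tags` and `built_for` are hypothetical.

```python
def wheel_tags(filename):
    """Split a wheel file name into its name, version, and compatibility tags."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel file: " + filename)
    parts = filename[: -len(".whl")].split("-")
    # Five parts normally; six when an optional build tag is present.
    if len(parts) not in (5, 6):
        raise ValueError("unexpected wheel file name: " + filename)
    python_tag, abi_tag, platform_tag = parts[-3:]
    return {
        "name": parts[0],
        "version": parts[1],
        "python": python_tag,
        "abi": abi_tag,
        "platform": platform_tag,
    }


def built_for(filename, major, minor):
    """Return True when the wheel's Python tag is the CPython tag for major.minor."""
    return wheel_tags(filename)["python"] == "cp{}{}".format(major, minor)


tags = wheel_tags("pyrfc-2.5.0-cp37-cp37m-linux_x86_64.whl")
print(tags["python"], tags["abi"], tags["platform"])
```

For the file name used in this post, the tags indicate a CPython 3.7 build for 64-bit Linux, which is what the AWS Glue job expects.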

Merge SAP SDK files into the PyRFC wheel file

To merge the SAP SDK files, complete the following steps:

- Unzip the wheel file you created:

cd dist

unzip pyrfc-2.5.0-cp37-cp37m-linux_x86_64.whl

- While still in the dist folder, copy the contents of lib (the SAP SDK files) to the pyrfc folder that was extracted from the wheel file:

cp ~/aws_to_sap/nwrfcsdk/lib/* pyrfc

Now you can update the rpath of the SAP SDK binaries using the PatchELF utility, a simple utility for modifying existing ELF executables and libraries.

- Install the supporting dependencies (gcc, gcc-c++, python3-devel) for the Linux utility function PatchELF:

sudo yum install -y gcc gcc-c++ python3-devel

- Download and install PatchELF:

wget https://download-ib01.fedoraproject.org/pub/epel/7/x86_64/Packages/p/patchelf-0.12-1.el7.x86_64.rpm

sudo rpm -i patchelf-0.12-1.el7.x86_64.rpm

- Run patchelf:

find -name '*.so' -exec patchelf --set-rpath '$ORIGIN' {} \;

- Update the wheel file with the modified pyrfc and dist-info folders:

zip -r pyrfc-2.5.0-cp37-cp37m-linux_x86_64.whl pyrfc pyrfc-2.5.0.dist-info

- Copy the wheel file pyrfc-2.5.0-cp37-cp37m-linux_x86_64.whl from Amazon EC2 to Amazon Simple Storage Service (Amazon S3):

aws s3 cp /home/ec2-user/aws_to_sap/PyRFC/dist/ s3://<bucket_name>/ec2-dump --recursive

Test the connection with SAP using the wheel file
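Before creating the job, it can be useful to confirm that the repackaged wheel file really contains the SAP SDK shared libraries next to the pyrfc module. The following is a minimal sketch using Python's standard zipfile module; the helper name `shared_libraries_in_wheel` is hypothetical, and the check for versioned names (`.so.` inside the file name) is an assumption about how some SDK libraries are named.

```python
import zipfile


def shared_libraries_in_wheel(wheel_path, package="pyrfc"):
    """Return the sorted names of shared libraries stored under the package folder."""
    prefix = package + "/"
    with zipfile.ZipFile(wheel_path) as wheel:
        return sorted(
            name
            for name in wheel.namelist()
            # Match plain .so files and versioned ones such as lib<name>.so.<n>.
            if name.startswith(prefix) and (name.endswith(".so") or ".so." in name)
        )


# Example use on the EC2 instance (path taken from the steps above):
#   libs = shared_libraries_in_wheel(
#       "/home/ec2-user/aws_to_sap/PyRFC/dist/pyrfc-2.5.0-cp37-cp37m-linux_x86_64.whl")
#   print("\n".join(libs))
```

If the list shows only the compiled pyrfc extension and none of the files copied from the SDK lib folder, the merge step was probably run from a different directory than the one where the wheel file was unzipped.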

The following sample code tests the connectivity between the SAP system and AWS Glue using the wheel file.

- On the AWS Glue Studio console, choose Jobs in the navigation pane.

- Select Spark script editor and choose Create.

- Overwrite the boilerplate code with the following code on the Script tab:

import os, sys, pyrfc

# Point the dynamic linker at the SAP SDK libraries bundled in the pyrfc package,
# then re-launch Python so that the new LD_LIBRARY_PATH takes effect.
os.environ['LD_LIBRARY_PATH'] = os.path.dirname(pyrfc.__file__)

os.execv('/usr/bin/python3', ['/usr/bin/python3', '-c', """
from pyrfc import Connection
import pandas as pd

## Variable declarations
sap_table = ''  # SAP table name
fields = []     # Fields to pull, for example [{'FIELDNAME': '<field>'}]
options = []    # WHERE clause lines (called "options"), for example [{'TEXT': '<condition>'}]
max_rows = 0    # Maximum number of rows to return (0 means no limit)
from_row = 0    # Number of rows to skip before reading

try:
    # Establish SAP RFC connection
    conn = Connection(ashost='', sysnr='', client='', user='', passwd='')
    print(f"SAP Connection successful – connection object: {conn}")
    if conn:
        # Read SAP table information
        tables = conn.call("RFC_READ_TABLE", QUERY_TABLE=sap_table, DELIMITER='|', FIELDS=fields, OPTIONS=options, ROWCOUNT=max_rows, ROWSKIPS=from_row)
        # FIELDS carries the column names; each DATA row is one delimited string in its WA field
        columns = [field["FIELDNAME"] for field in tables["FIELDS"]]
        data = [[value.strip() for value in row["WA"].split("|")] for row in tables["DATA"]]
        df = pd.DataFrame(data, columns=columns)
        if not df.empty:
            print(f"Successfully extracted data from SAP using custom RFC - Printing the top 5 rows: {df.head(5)}")
        else:
            print("No data returned from the request. Please check database/schema details")
    else:
        print("Unable to connect with SAP. Please check connection details")
except Exception as e:
    print(f"An exception occurred while connecting with SAP system: {e.args}")
"""])

- On the Job details tab, fill in mandatory fields.

- In the Advanced properties section, provide the S3 URI of the wheel file in the Job parameters section as a key-value pair:

  - Key – --additional-python-modules

  - Value – s3://<bucket_name>/ec2-dump/pyrfc-2.5.0-cp37-cp37m-linux_x86_64.whl (provide your S3 bucket name)

- Save the job and choose Run.
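The same job settings can also be expressed in code instead of through the console. The following is a rough sketch, not the setup used at Vyaire: it only builds the keyword arguments for the boto3 AWS Glue client's `create_job` call. The helper name `build_glue_job_definition`, the job name, the role ARN, the script location, and the default Glue version of 3.0 are all assumptions for illustration.

```python
def build_glue_job_definition(job_name, role_arn, script_s3_uri, wheel_s3_uri,
                              glue_version="3.0"):
    """Build keyword arguments for the boto3 Glue client's create_job call."""
    return {
        "Name": job_name,
        "Role": role_arn,
        "GlueVersion": glue_version,  # assumption: a Glue version that runs Python 3.7
        "Command": {
            "Name": "glueetl",  # Spark script job
            "ScriptLocation": script_s3_uri,
            "PythonVersion": "3",
        },
        "DefaultArguments": {
            # Same key-value pair as entered in the Job parameters section.
            "--additional-python-modules": wheel_s3_uri,
        },
    }


job = build_glue_job_definition(
    job_name="sap-connectivity-test",                        # hypothetical
    role_arn="arn:aws:iam::123456789012:role/GlueJobRole",   # hypothetical
    script_s3_uri="s3://<bucket_name>/scripts/sap_test.py",  # hypothetical
    wheel_s3_uri="s3://<bucket_name>/ec2-dump/pyrfc-2.5.0-cp37-cp37m-linux_x86_64.whl",
)
print(job["DefaultArguments"])

# To create the job (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("glue").create_job(**job)
```

Keeping the definition in a plain dictionary makes it easy to review the wheel file URI before anything is created in the account.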

Verify SAP connectivity

Complete the following steps to verify SAP connectivity:

- When the job run is complete, navigate to the Runs tab on the Jobs page and choose Output logs in the logs section.

- Choose the job_id and open the detailed logs.

- Observe the message SAP Connection successful – connection object: <connection object>, which confirms a successful connection with the SAP system.

- Observe the message Successfully extracted data from SAP using custom RFC - Printing the top 5 rows, which confirms successful access of data from the SAP system.
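RFC_READ_TABLE returns each row as a single delimited string in the WA field of its DATA table, with the column names in the FIELDNAME entries of its FIELDS table. The sketch below shows one way to reshape such a result into records without needing an SAP connection; the helper name `rfc_table_to_records` is hypothetical, and the sample values are made up for illustration.

```python
def rfc_table_to_records(result, delimiter="|"):
    """Turn an RFC_READ_TABLE result into a list of {field name: value} dictionaries."""
    names = [field["FIELDNAME"] for field in result["FIELDS"]]
    records = []
    for row in result["DATA"]:
        # Each row is one delimited string; strip the fixed-width padding.
        values = [value.strip() for value in row["WA"].split(delimiter)]
        records.append(dict(zip(names, values)))
    return records


# Made-up sample shaped like an RFC_READ_TABLE result.
sample = {
    "FIELDS": [{"FIELDNAME": "MATNR"}, {"FIELDNAME": "MTART"}],
    "DATA": [
        {"WA": "000000000000001234|FERT"},
        {"WA": "000000000000005678|HALB "},
    ],
}
print(rfc_table_to_records(sample))
```

A list of dictionaries like this can be passed directly to pandas.DataFrame if a DataFrame is needed downstream.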

Conclusion

AWS Glue facilitated the extraction, transformation, and loading of data from different ERPs into Salesforce SMAX, improving the visibility of Vyaire’s products and related information for service technicians and tech support users.

In this post, you learned how you can use AWS Glue to connect to SAP ERP utilizing SAP SDK remote functions. To learn more about AWS Glue, check out AWS Glue Documentation.

About the Authors

Siva Manickam is the Director of Enterprise Architecture, Integrations, Digital Research & Development at Vyaire Medical Inc. In this role, Mr. Manickam is responsible for the company’s corporate functions (Enterprise Architecture, Enterprise Integrations, Data Engineering) and product function (Digital Innovation Research and Development).

Prahalathan M is the Data Integration Architect at Vyaire Medical Inc. In this role, he is responsible for end-to-end enterprise solutions design, architecture, and modernization of integrations and data platforms using AWS cloud-native services.

Deenbandhu Prasad is a Senior Analytics Specialist at AWS, specializing in big data services. He is passionate about helping customers build modern data architecture on the AWS Cloud. He has helped customers of all sizes implement data management, data warehouse, and data lake solutions.
